Large language models (LLMs) have truly democratized AI development. Software engineers can now build many AI applications without dedicated model development. A single LLM is a one-stop shop for a wide variety of natural language processing (NLP) tasks, such as key information extraction or named entity recognition, that in the past would each have required a dedicated NLP model. Developers simply describe the task in natural language, and the model responds with the result. These natural-language queries, known as “prompts”, have given rise to a whole host of “prompt engineering” best practices.

One intriguing aspect of LLM behavior is that a model’s output can change depending on how the input prompt is structured: variations in the prompt lead to variations in the output. These fluctuations can strongly affect an application’s performance metrics, so to achieve optimal performance, engineers need to carefully refine the prompts they give the LLM.

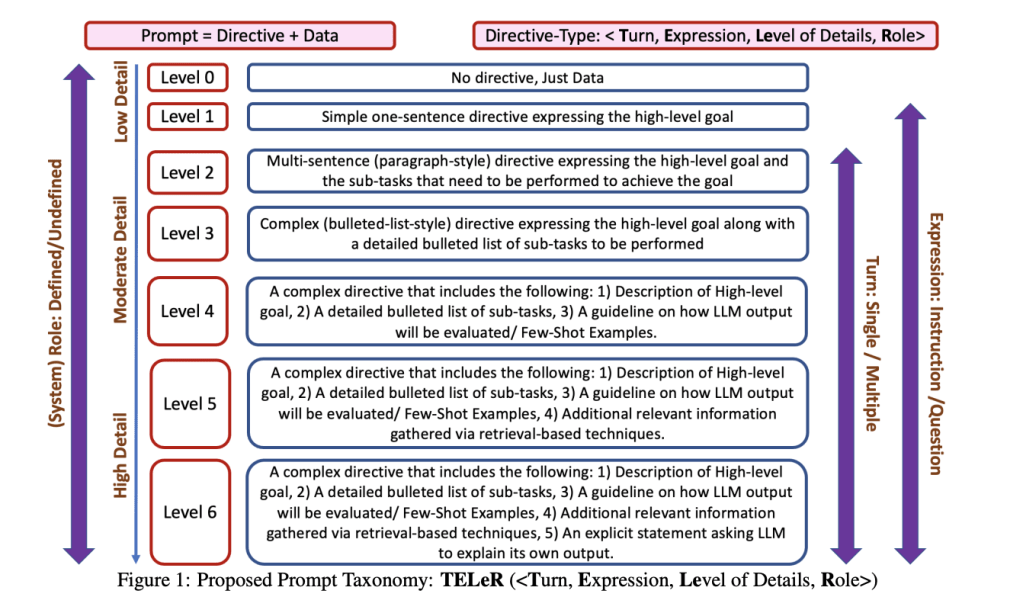

I recently came across a very useful taxonomy of prompts that can serve as a simple guide for engineers building generative AI applications with LLMs. It comes from the paper “TELeR: A General Taxonomy of LLM Prompts for Benchmarking Complex Tasks”.

Although the paper was motivated by the need for a standard way to benchmark LLMs on complex tasks, the framework it offers is helpful to engineers as well. Its seven-level taxonomy ranges from prompts that give the LLM very little direction to prompts that spell out in detail what the user expects in the output.
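To make the idea of escalating detail concrete, here is a minimal Python sketch that assembles a prompt for a made-up invoice-extraction task at seven levels (0 to 6). The function `build_prompt` and all of its parameters are hypothetical illustrations, not code from the paper, and the per-level contents are only a loose paraphrase of the taxonomy (data alone, then a one-sentence directive, a fuller description, bulleted sub-tasks, evaluation criteria, extra background context, and finally a request for an explanation). Check the paper for the exact level definitions.

```python
from typing import List, Optional


# Hypothetical helper: a loose paraphrase of escalating prompt detail,
# not the paper's exact level definitions.
def build_prompt(
    level: int,
    data: str,
    directive: str,
    description: str,
    subtasks: List[str],
    criteria: str,
    context: Optional[str] = None,
) -> str:
    """Return a prompt whose amount of directive detail grows with `level` (0 to 6)."""
    if not 0 <= level <= 6:
        raise ValueError("level must be between 0 and 6")
    parts: List[str] = []
    if level >= 1:
        parts.append(directive)  # a single-sentence instruction
    if level >= 2:
        parts.append(description)  # a few sentences on the overall goal
    if level >= 3:
        parts.append("\n".join(f"- {task}" for task in subtasks))  # bulleted sub-tasks
    if level >= 4:
        parts.append(f"Your answer will be judged on: {criteria}")  # evaluation guidance
    if level >= 5 and context:
        parts.append(f"Background information:\n{context}")  # e.g. retrieved documents
    if level >= 6:
        parts.append("Briefly explain how you arrived at your answer.")
    parts.append(data)  # at level 0 the prompt is just the input data
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Illustrative inputs for a made-up invoice-extraction task.
    invoice = "Invoice #123 from Acme Corp, dated 2024-01-15, total $450.00."
    for lvl in (0, 1, 3, 6):
        print(f"--- level {lvl} ---")
        print(build_prompt(
            lvl,
            data=invoice,
            directive="Extract the key fields from the invoice below.",
            description="The fields will populate an accounting database, so values must match the text exactly.",
            subtasks=["Vendor name", "Invoice date", "Total amount"],
            criteria="exact match of each field value",
            context="Dates should be reported in ISO 8601 format.",
        ))
```

Each level here strictly adds to the one before it, which keeps the variants easy to compare. Sending every variant to the same model and scoring the outputs is one way to see how much the level of detail moves your application's metrics.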

Check out the paper and use the taxonomy for your next project.